Python Web Crawling - How the Scrapy Framework Works

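Python web crawling involves two important skills: writing regular expressions and using the Scrapy framework. Regular expressions work in much the same way across programming languages, and plenty of resources on them are available online.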
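To give a feel for the regular-expression side, the sketch below uses Python's standard `re` module to pull link targets out of an HTML fragment. The function name `extract_hrefs` and the sample fragment are illustrative assumptions. For real pages, an HTML parser or Scrapy's own selectors are usually more robust than a regex.

```python
import re

# Matches href="..." or href='...' and captures the URL between the quotes.
HREF_RE = re.compile(r"""href=["']([^"']+)["']""", re.IGNORECASE)


def extract_hrefs(html: str) -> list[str]:
    """Return every href value found in the HTML fragment, in document order."""
    return HREF_RE.findall(html)


# Hypothetical HTML fragment, used only for illustration.
sample = '<a href="/index/1">Next</a> <a href="/view/Albania-3">Albania</a>'
print(extract_hrefs(sample))  # ['/index/1', '/view/Albania-3']
```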

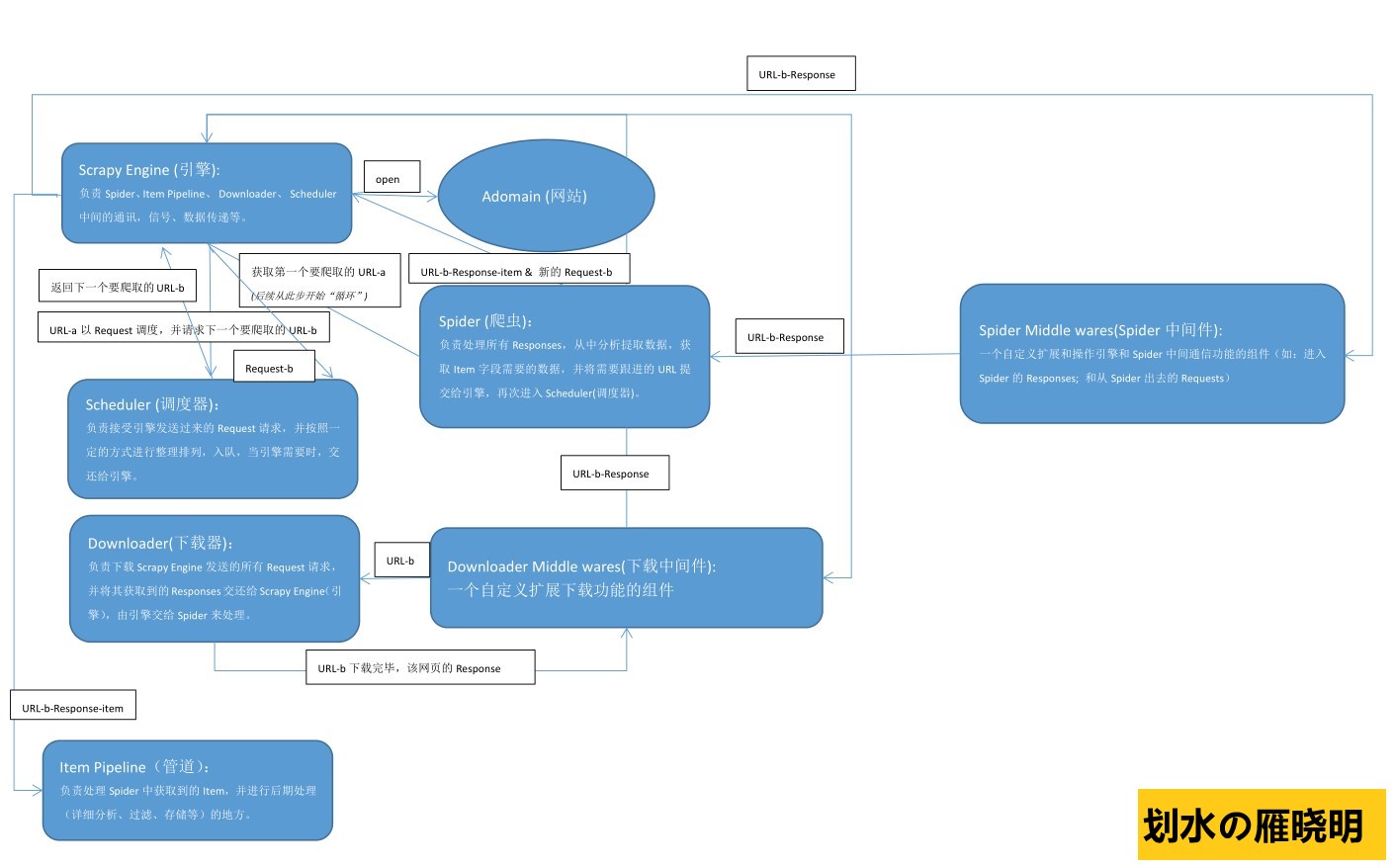

The image above is a hand-drawn diagram of how the Scrapy framework works, included to aid understanding.
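Scrapy's architecture is usually described as an Engine that coordinates a Scheduler, a Downloader, the Spiders and the Item Pipelines. The sketch below models that data flow in plain Python. It is a deliberately simplified, single-threaded toy written for illustration, not Scrapy's actual code (the real framework runs asynchronously on Twisted), and every name in it (`Scheduler`, `fake_downloader`, `run_engine`, `PAGES`) is hypothetical. It shows the loop the Engine drives: the Scheduler hands out requests, the Downloader turns them into responses, and the spider callback yields either new requests (sent back to the Scheduler) or items (sent to the pipeline).

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Request:
    url: str
    callback: Callable  # spider function that will receive the Response


@dataclass
class Response:
    url: str
    body: str


class Scheduler:
    """FIFO queue of pending requests that drops URLs it has already seen."""

    def __init__(self):
        self.queue = deque()
        self.seen = set()

    def enqueue(self, request: Request) -> None:
        if request.url not in self.seen:  # duplicate filter
            self.seen.add(request.url)
            self.queue.append(request)

    def next_request(self) -> Optional[Request]:
        return self.queue.popleft() if self.queue else None


def fake_downloader(request: Request, pages: dict) -> Response:
    """Stand-in Downloader: looks the URL up in a dict instead of using the network."""
    return Response(request.url, pages.get(request.url, ""))


def run_engine(start_requests, pages, pipeline) -> None:
    """Toy Engine loop: Scheduler -> Downloader -> spider callback -> Scheduler or pipeline."""
    scheduler = Scheduler()
    for request in start_requests:
        scheduler.enqueue(request)
    while (request := scheduler.next_request()) is not None:
        response = fake_downloader(request, pages)
        for output in request.callback(response):
            if isinstance(output, Request):
                scheduler.enqueue(output)  # newly found links go back to the Scheduler
            else:
                pipeline(output)  # scraped items go to the Item Pipeline


# Hypothetical "site": each page body is just a space-separated list of links.
PAGES = {
    "/index/0": "/view/A /view/B /index/1",
    "/index/1": "/view/B /view/C",
}


def parse(response: Response):
    """Listing-page callback: follow /index/ links, send the rest to parse_item."""
    for link in response.body.split():
        callback = parse if link.startswith("/index/") else parse_item
        yield Request(link, callback)


def parse_item(response: Response):
    """Detail-page callback: yield one item per page."""
    yield {"url": response.url}


items = []
run_engine([Request("/index/0", parse)], PAGES, items.append)
print(items)  # [{'url': '/view/A'}, {'url': '/view/B'}, {'url': '/view/C'}]
```

Each detail page is scraped only once even though `/view/B` is linked twice, because the toy Scheduler's duplicate filter drops the second request. Scrapy's real scheduler also filters duplicate requests by default.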

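Below is a spider created with Scrapy. It was generated from the built-in `crawl` template so that it can use Scrapy's `CrawlSpider` class. Compared with the basic `scrapy.Spider`, `CrawlSpider` provides extra attributes and methods that are useful when crawling a website, most notably the `rules` attribute, which tells the spider which links to follow and which callback should handle the pages they lead to: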

```bash
$ scrapy genspider country_or_district example.python-scraping.com --template=crawl
```

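When run from inside a Scrapy project named `example`, the genspider command creates a skeleton spider in example/spiders/country_or_district.py. After filling in the rules and the `parse_item` method, the spider looks like the code below. It imports `CountryOrDistrictItem`, which must be defined in example/items.py with `name` and `population` fields.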

```python
# -*- coding: utf-8 -*-
import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule
from example.items import CountryOrDistrictItem


class CountryOrDistrictSpider(CrawlSpider):
    name = "country_or_district"
    allowed_domains = ["example.python-scraping.com"]
    start_urls = ["http://example.python-scraping.com/"]

    # Follow listing (/index/) pages to discover more links, and pass
    # detail (/view/) pages to parse_item. URLs containing /user/ are skipped.
    rules = (
        Rule(LinkExtractor(allow=r"/index/", deny=r"/user/"),
             follow=True),
        Rule(LinkExtractor(allow=r"/view/", deny=r"/user/"),
             callback="parse_item"),
    )

    def parse_item(self, response):
        item = CountryOrDistrictItem()
        # CSS selector for the text of the name cell
        name_css = "tr#places_country_or_district__row td.w2p_fw::text"
        item["name"] = response.css(name_css).extract()
        # Single quotes outside so the double quotes inside the XPath don't end the string
        pop_xpath = '//tr[@id="places_population__row"]/td[@class="w2p_fw"]/text()'
        item["population"] = response.xpath(pop_xpath).extract()
        return item
```
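The `allow` and `deny` arguments of each `LinkExtractor` are regular expressions. The stdlib-only sketch below approximates how the two rules in the spider sort URLs: a link is extracted when `re.search` finds the allow pattern in the absolute URL and does not find the deny pattern, and when several rules match, CrawlSpider uses the first one. The `classify` helper, the `RULES` list and the sample paths are hypothetical illustrations, not part of Scrapy.

```python
import re
from typing import Optional

# Mirrors the spider's two Rule objects as (label, allow regex, deny regex).
RULES = [
    ("follow", r"/index/", r"/user/"),
    ("parse_item", r"/view/", r"/user/"),
]


def classify(url: str) -> Optional[str]:
    """Return the label of the first rule that would extract this URL, or None.

    Approximates LinkExtractor's check (re.search of the allow and deny patterns
    against the absolute URL) and CrawlSpider's "first matching rule wins" order.
    """
    for label, allow, deny in RULES:
        if re.search(allow, url) and not re.search(deny, url):
            return label
    return None  # no rule extracts the link, so it is never requested


# Hypothetical paths, for illustration only.
base = "http://example.python-scraping.com"
for path in ["/index/1", "/view/Albania-3", "/user/login?_next=/index/1"]:
    print(path, "->", classify(base + path))
# /index/1 -> follow
# /view/Albania-3 -> parse_item
# /user/login?_next=/index/1 -> None
```

The last URL contains `/index/` but is still skipped because the deny pattern matches, which is why both rules carry `deny=r"/user/"`. Also note that when a `Rule` has a callback, `follow` defaults to `False`, so links found on /view/ pages are not crawled further.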
